Xamarin.Forms Face Recognition

Hello! Artificial intelligence applications are getting better day by day, and as this area develops, I would like to add AI features to my applications. Thanks to Azure, we can easily add AI and machine learning APIs to our applications. In the coming days I am going to write articles about using Cognitive Services in Xamarin.Forms applications, and this article covers implementing face recognition in Xamarin.Forms apps. Azure has different types of subscriptions. I use Azure for Students, which you can use too if you are a student like me.

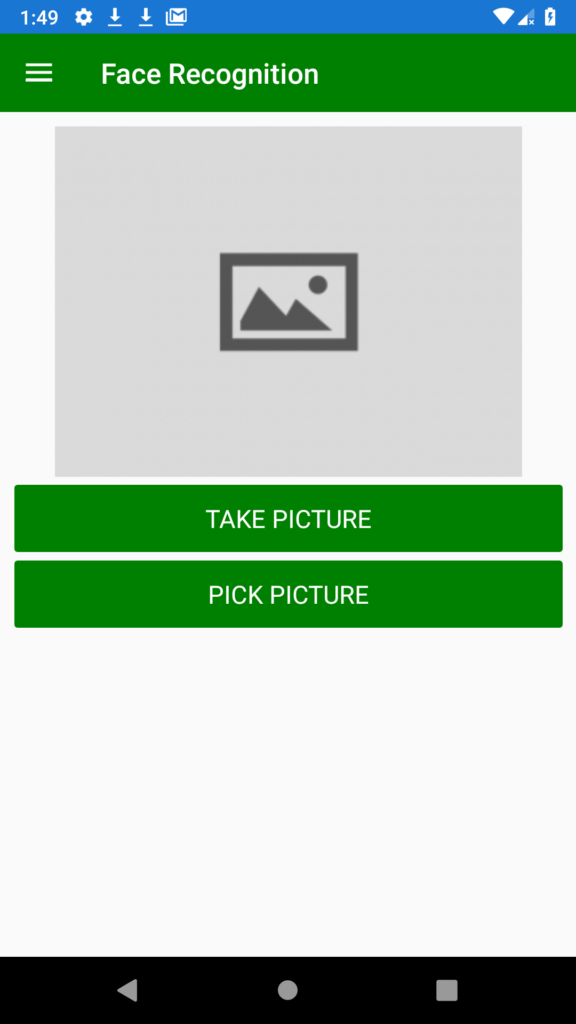

First, go to Azure and create a Face API resource for face recognition. It is really easy to do (if you cannot create it, ask me). Next, create a blank Xamarin.Forms application. After the application was created, I added a page named FaceRecognitionPage and designed it as follows:

    <StackLayout Margin="10">
        <Image HeightRequest="250"
               Source="defaultimage"
               x:Name="myImage"/>
        <Button Text="Take Picture"
                FontSize="Medium"
                BackgroundColor="Green"
                TextColor="White"
                Clicked="ButtonTakePicture_Clicked"/>
        <Button Text="Pick Picture"
                FontSize="Medium"
                BackgroundColor="Green"
                TextColor="White"
                Clicked="ButtonPickPicture_Clicked"/>
        <ActivityIndicator Color="Green"
                           VerticalOptions="Center"
                           HorizontalOptions="Center"
                           IsVisible="False"
                           x:Name="myActivitIndicator"/>
        <Label x:Name="labelGender"
               FontSize="Medium"
               VerticalOptions="Center"
               HorizontalOptions="Center"
               FontAttributes="Bold"
               TextColor="Black"/>
        <Label x:Name="labelAge"
               FontSize="Medium"
               VerticalOptions="Center"
               HorizontalOptions="Center"
               FontAttributes="Bold"
               TextColor="Black"/>
    </StackLayout>

I am going to build samples for several Cognitive Services, so my app uses a master-detail page. If you copy and paste the code above, you will have the same page design. The design part was easy; now we should write the code-behind. For this project I followed the Microsoft Face API documentation. I already wrote a separate article about the process of taking and picking photos. Do not forget to add the Newtonsoft.Json and Xam.Plugin.Media NuGet packages to your project.

If you look at the documentation, you can see a sample JSON response at the end of the page. We should convert this JSON to C# classes: copy the JSON, paste it into a JSON-to-C# converter, and convert it. Then take the generated C# code and create a class file in your project. I named the class FaceModel, so do not forget to rename RootObject to FaceModel.
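To see what the conversion gives you on a small scale, here is a minimal sketch that deserializes a made-up response shaped like the detect endpoint's JSON array. It assumes a trimmed, hypothetical subset of the generated classes (only faceId, faceRectangle, gender and age) and a hypothetical FaceJsonDemo helper. It also swaps in System.Text.Json from the .NET base library instead of the Newtonsoft.Json package used in this article, so it runs without NuGet packages. The JSON values are illustrative, not real service output.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Trimmed, hypothetical copies of the generated classes (only the fields used here).
public class FaceRectangle
{
    public int top { get; set; }
    public int left { get; set; }
    public int width { get; set; }
    public int height { get; set; }
}

public class FaceAttributes
{
    public string gender { get; set; }
    public double age { get; set; }
}

public class FaceModel
{
    public string faceId { get; set; }
    public FaceRectangle faceRectangle { get; set; }
    public FaceAttributes faceAttributes { get; set; }
}

public static class FaceJsonDemo
{
    // Made-up response body: a JSON array with one detected face.
    public const string SampleJson =
        "[{\"faceId\":\"00000000-0000-0000-0000-000000000001\"," +
        "\"faceRectangle\":{\"top\":131,\"left\":177,\"width\":162,\"height\":162}," +
        "\"faceAttributes\":{\"gender\":\"female\",\"age\":27.0}}]";

    // Deserializes the array into a list; returns an empty list if the JSON is "null".
    public static List<FaceModel> Parse(string json) =>
        JsonSerializer.Deserialize<List<FaceModel>>(json) ?? new List<FaceModel>();
}
```

Because the property names are spelled exactly like the JSON keys (faceId, faceAttributes, ...), the default case-sensitive matching in System.Text.Json finds them without extra attributes; Newtonsoft.Json matches names case-insensitively by default, so the same classes work with JsonConvert as well.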

    using System.Collections.Generic;

    public class FaceModel
    {
        public string faceId { get; set; }
        public FaceRectangle faceRectangle { get; set; }
        public FaceAttributes faceAttributes { get; set; }
    }

    public class FaceRectangle
    {
        public int top { get; set; }
        public int left { get; set; }
        public int width { get; set; }
        public int height { get; set; }
    }

    public class HeadPose
    {
        public double pitch { get; set; }
        public double roll { get; set; }
        public double yaw { get; set; }
    }

    public class FacialHair
    {
        public double moustache { get; set; }
        public double beard { get; set; }
        public double sideburns { get; set; }
    }

    public class Emotion
    {
        public double anger { get; set; }
        public double contempt { get; set; }
        public double disgust { get; set; }
        public double fear { get; set; }
        public double happiness { get; set; }
        public double neutral { get; set; }
        public double sadness { get; set; }
        public double surprise { get; set; }
    }

    public class Blur
    {
        public string blurLevel { get; set; }
        public double value { get; set; }
    }

    public class Exposure
    {
        public string exposureLevel { get; set; }
        public double value { get; set; }
    }

    public class Noise
    {
        public string noiseLevel { get; set; }
        public double value { get; set; }
    }

    public class Makeup
    {
        public bool eyeMakeup { get; set; }
        public bool lipMakeup { get; set; }
    }

    public class Occlusion
    {
        public bool foreheadOccluded { get; set; }
        public bool eyeOccluded { get; set; }
        public bool mouthOccluded { get; set; }
    }

    public class HairColor
    {
        public string color { get; set; }
        public double confidence { get; set; }
    }

    public class Hair
    {
        public double bald { get; set; }
        public bool invisible { get; set; }
        public List<HairColor> hairColor { get; set; }
    }

    public class FaceAttributes
    {
        public double smile { get; set; }
        public HeadPose headPose { get; set; }
        public string gender { get; set; }
        public double age { get; set; }
        public FacialHair facialHair { get; set; }
        public string glasses { get; set; }
        public Emotion emotion { get; set; }
        public Blur blur { get; set; }
        public Exposure exposure { get; set; }
        public Noise noise { get; set; }
        public Makeup makeup { get; set; }
        public List<object> accessories { get; set; }
        public Occlusion occlusion { get; set; }
        public Hair hair { get; set; }
    }
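The page only displays gender and age, but the other attributes in these classes are ready to use. For example, Emotion holds one confidence score per emotion. Here is a minimal sketch, assuming a hypothetical EmotionHelper.Dominant helper (not part of the Face API or of this article's page), that picks the highest-scoring emotion. The Emotion class is repeated so the block compiles on its own.

```csharp
// Same shape as the Emotion class above: one confidence score per emotion.
public class Emotion
{
    public double anger { get; set; }
    public double contempt { get; set; }
    public double disgust { get; set; }
    public double fear { get; set; }
    public double happiness { get; set; }
    public double neutral { get; set; }
    public double sadness { get; set; }
    public double surprise { get; set; }
}

public static class EmotionHelper
{
    // Hypothetical helper: returns the name of the emotion with the highest score.
    // On a tie, the emotion listed first in the array below wins.
    public static string Dominant(Emotion e)
    {
        var scores = new (string Name, double Score)[]
        {
            ("anger", e.anger),
            ("contempt", e.contempt),
            ("disgust", e.disgust),
            ("fear", e.fear),
            ("happiness", e.happiness),
            ("neutral", e.neutral),
            ("sadness", e.sadness),
            ("surprise", e.surprise),
        };

        var best = scores[0];
        foreach (var s in scores)
        {
            if (s.Score > best.Score)
            {
                best = s;
            }
        }
        return best.Name;
    }
}
```

In the page you could then show something like "Emotion : " + EmotionHelper.Dominant(faceModels[0].faceAttributes.emotion) in a third label, provided emotion is among the requested returnFaceAttributes.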

After adding the class, open the page's code-behind (.cs) file to finish the process. The code uses your subscription key and endpoint URL, so copy them from your Face resource in the Azure portal and paste them into the subscriptionKey and uriBase constants. Copy the code below into your project and face recognition will work. I only display the gender and age attributes, but you can use the other attributes as well.

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Net.Http;
    using System.Net.Http.Headers;
    using System.Threading.Tasks;
    using Newtonsoft.Json;
    using Plugin.Media;
    using Plugin.Media.Abstractions;
    using Xamarin.Forms;

    public partial class FaceRecognitionPage : ContentPage
    {
        MediaFile mediaFile;

        // Paste your own values from the Azure portal here.
        const string subscriptionKey = "Key";
        // Full detect URL of your Face resource, ending in /face/v1.0/detect
        const string uriBase = "End-Point";

        // One shared HttpClient instead of creating a new one for every request
        static readonly HttpClient client = new HttpClient();

        public FaceRecognitionPage()
        {
            InitializeComponent();
        }

        private async void ButtonTakePicture_Clicked(object sender, EventArgs e)
        {
            await TakePicture();
        }

        private async void ButtonPickPicture_Clicked(object sender, EventArgs e)
        {
            await PickPicture();
        }

        // Analysis of the picture: sends it to the Face API and shows the first face's gender and age
        public async Task MakeAnalysisRequest(string imageFilePath)
        {
            myActivitIndicator.IsVisible = true;
            myActivitIndicator.IsRunning = true;

            try
            {
                string requestParameters = "returnFaceId=true&returnFaceLandmarks=false" +
                    "&returnFaceAttributes=age,gender,headPose,smile,facialHair,glasses," +
                    "emotion,hair,makeup,occlusion,accessories,blur,exposure,noise";
                string uri = uriBase + "?" + requestParameters;

                byte[] byteData = GetImageAsByteArray(imageFilePath);

                using (var request = new HttpRequestMessage(HttpMethod.Post, uri))
                {
                    // The key is sent on each request, so the shared client stays header-free
                    request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
                    request.Content = new ByteArrayContent(byteData);
                    request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

                    HttpResponseMessage response = await client.SendAsync(request);
                    string contentString = await response.Content.ReadAsStringAsync();

                    // On failure the service returns an error object, not a list of faces
                    if (!response.IsSuccessStatusCode)
                    {
                        await DisplayAlert("ERROR", contentString, "OK");
                        return;
                    }

                    // Display result
                    List<FaceModel> faceModels = JsonConvert.DeserializeObject<List<FaceModel>>(contentString);

                    if (faceModels != null && faceModels.Count != 0)
                    {
                        labelGender.Text = "Gender : " + faceModels[0].faceAttributes.gender;
                        labelAge.Text = "Age : " + faceModels[0].faceAttributes.age;
                    }
                    else
                    {
                        labelGender.Text = "No face detected";
                        labelAge.Text = string.Empty;
                    }
                }
            }
            finally
            {
                // Hide the indicator even if the request throws
                myActivitIndicator.IsVisible = false;
                myActivitIndicator.IsRunning = false;
            }
        }

        public byte[] GetImageAsByteArray(string imageFilePath)
        {
            // Reads the whole image file; File.ReadAllBytes opens and closes the stream itself
            return File.ReadAllBytes(imageFilePath);
        }

        // Taking picture process
        async Task TakePicture()
        {
            await CrossMedia.Current.Initialize();

            if (!CrossMedia.Current.IsTakePhotoSupported || !CrossMedia.Current.IsCameraAvailable)
            {
                await DisplayAlert("ERROR", "Camera is NOT available", "OK");
                return;
            }

            mediaFile = await CrossMedia.Current.TakePhotoAsync(new StoreCameraMediaOptions
            {
                Directory = "Sample",
                Name = "myImage.jpg"
            });

            if (mediaFile == null)
            {
                return;
            }

            myImage.Source = ImageSource.FromStream(() => mediaFile.GetStream());

            await MakeAnalysisRequest(mediaFile.Path);
        }

        // Picking picture process
        async Task PickPicture()
        {
            await CrossMedia.Current.Initialize();

            if (!CrossMedia.Current.IsPickPhotoSupported)
            {
                await DisplayAlert("ERROR", "Pick Photo is NOT supported", "OK");
                return;
            }

            var file = await CrossMedia.Current.PickPhotoAsync();

            if (file == null)
            {
                return;
            }

            myImage.Source = ImageSource.FromStream(() => file.GetStream());

            await MakeAnalysisRequest(file.Path);
        }
    }
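If you want to check the request without calling the service, the request-building part of MakeAnalysisRequest can be pulled out into a small function. Here is a minimal sketch, assuming a hypothetical FaceRequestBuilder.Build helper. It assembles the same kind of POST the page sends: the query string, the Ocp-Apim-Subscription-Key header and an application/octet-stream body, returned as an HttpRequestMessage that is never sent. The shorter attribute list in the usage below is only for illustration, and the attributes the service accepts may differ between API versions.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;

public static class FaceRequestBuilder
{
    // Hypothetical helper: builds (but does not send) a detect request.
    public static HttpRequestMessage Build(string uriBase, string subscriptionKey,
                                           byte[] imageBytes, string returnFaceAttributes)
    {
        string requestParameters = "returnFaceId=true&returnFaceLandmarks=false" +
            "&returnFaceAttributes=" + returnFaceAttributes;

        var request = new HttpRequestMessage(HttpMethod.Post, uriBase + "?" + requestParameters);

        // The subscription key travels in this header on every call.
        request.Headers.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

        // The raw image bytes are the body, so the content type is octet-stream.
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

        return request;
    }
}
```

Building a fresh HttpRequestMessage per call keeps the key on the request rather than in DefaultRequestHeaders, which is what lets a single shared HttpClient be reused; sending it is then just await client.SendAsync(request).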

If you have any questions, you can ask them via e-mail or in the comments; I will be happy to help. In the next article, I will try to cover Text Analytics. Keep working 🙂